Blocking Casting Call Chaos with Amazon Rekognition

Wannabe actors aren’t known for their discernment, or their professionalism. Every casting director has to go through the time-wasting rite of passage, aka The Shitshow, when they put out a casting call. Everyone wants to be famous.

You’re looking for males with the age appearance of 25–30, short hair, corporate type, dark featured, slim build, and so on. Out goes the notice.

Welcome To The Weekly Disaster

Four hours later, there are 800 emails (if you’re still mad enough to do it that way), all filled with exactly what you weren’t asking for: women, children, animals, group shots, broken files, 14MB family photos.

Every actor on the list has sent you their resume. AGAIN. They haven’t read the notice, and just replied by reflex, as if a doctor were tapping their knee with a hammer. You were specific. You were targeted. But the mob descended, complete with a landslide of the same stupid questions (“will we need to act?”).

Casting is, in effect, a sophisticated form of crowd control.

If you’re using a management system, you can do a crude form of profile-matching, where a user is prevented from applying if their demographic data doesn’t match your criteria. But it still doesn’t check what or whom the image is actually of.

Amongst these systems are:

- Casting Manager (https://castingmanager.com/)

- SkyBolt (https://www.skybolt.net/)

- Casting Crane (https://www.castingcrane.com/)

- Casting Frontier (https://castingfrontier.com/)

- AgencyPro (https://www.agencyprosoftware.com/)

- Audition Magic (http://www.auditionmagic.com/)

- CastaSugar (https://www.castasugar.com/)

Most of these simply automate an existing process which is appallingly bad. The actual problem of casting is one of human discernment and discrimination, based on a personal briefing from the director. The problem-behind-the-problem is the sheer amount of time wasted just filtering out what is not relevant.

This is also an enormous problem for actors: if the casting director is spending 99% of their time just improving the signal-to-noise ratio of the pipeline, the time they could have spent experimenting and considering the wildcards is entirely gone.

As a start, these platforms can (as any web app can) filter out a photo that is too big (8000 pixels), in the wrong format (BMP), or a duplicate (checksum, filename, content, etc.). Not that many of them do (cough, Actors Access, Backstage, cough).
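Those cheap checks can be sketched in a few lines of standard-library Python. This is only an illustration: the 5MB limit, the accepted formats, and the function names are assumptions rather than anything these platforms actually do, and it checks file size in bytes, since reading pixel dimensions would need an image library such as Pillow.

```python
import hashlib

MAX_BYTES = 5 * 1024 * 1024  # assumed upload limit, not taken from any platform

# Magic-byte signatures for the formats we are (hypothetically) willing to accept
ALLOWED_SIGNATURES = {
    "jpeg": b"\xff\xd8\xff",
    "png": b"\x89PNG\r\n\x1a\n",
}


def sniff_format(data: bytes):
    """Return the format name if the bytes start with a known signature, else None."""
    for name, signature in ALLOWED_SIGNATURES.items():
        if data.startswith(signature):
            return name
    return None


def prefilter(data: bytes, seen_hashes: set) -> str:
    """Return 'ok' or a rejection reason; remember the checksum of accepted files."""
    if len(data) > MAX_BYTES:
        return "too_big"
    if sniff_format(data) is None:
        return "wrong_format"  # e.g. a BMP, which starts with b"BM"
    digest = hashlib.sha256(data).hexdigest()
    if digest in seen_hashes:
        return "duplicate"
    seen_hashes.add(digest)
    return "ok"
```

A checksum only catches byte-for-byte duplicates. The same photo re-saved at a different quality would slip through and would need a perceptual hash instead.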

The Monster Mutates Once Again

Since the early 2000s, the de facto computer vision library in use for most applications has been OpenCV (https://opencv.org/), short for “Open Source Computer Vision Library”. It can detect faces, and is the kind of tool that draws the square boxes around people’s heads on social media when a photo is uploaded.

Which is where the spooky Minority Report-esque Orwellian horror show tool Rekognition (https://aws.amazon.com/rekognition/) can come in very handy. We can make it learn.

Sometimes it is more valuable to bump into what you weren’t looking for. Casting is always going to be a matter of human judgment. But for the purposes of avoiding the shitshow, and in the name of science, we’re going to apply a little unfairness.

Test Driving This Driverless Car

For our purposes, we’ll be using Backstage (https://www.backstage.com/talent/) as the source material, from the “recently uploaded” section.

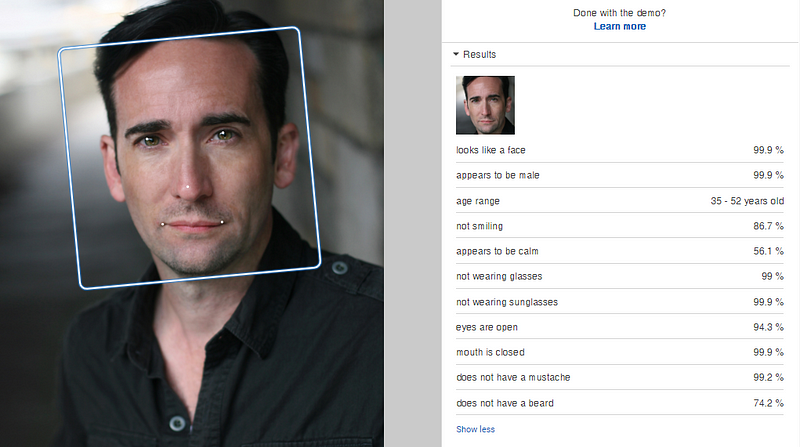

So, it contains a face (headshot), appears to be male, 35–52, does not have facial hair. If we wanted to go even deeper, we could determine his expression in the photo. According to his profile, Daniel has a playing age of 35–45.
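That read-out (face, gender, age range, facial hair, expression) maps onto fields Rekognition returns from `detect_faces` when all attributes are requested. Here is a minimal sketch, assuming the image already sits in an S3 bucket. `summarise_face` and `fetch_faces` are hypothetical helper names, not part of boto3.

```python
def summarise_face(face: dict) -> dict:
    """Boil one entry of FaceDetails down to the fields a casting filter cares about."""
    emotions = face.get("Emotions", [])
    top_emotion = max(emotions, key=lambda e: e["Confidence"], default=None)
    return {
        "gender": face["Gender"]["Value"],
        "age_range": (face["AgeRange"]["Low"], face["AgeRange"]["High"]),
        "facial_hair": bool(face["Beard"]["Value"] or face["Mustache"]["Value"]),
        "emotion": top_emotion["Type"] if top_emotion else None,
    }


def fetch_faces(bucket: str, key: str) -> list:
    """Ask Rekognition for every face in an S3 image, with all attributes."""
    import boto3  # imported lazily so summarise_face can be used without AWS access

    client = boto3.client("rekognition")
    response = client.detect_faces(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        Attributes=["ALL"],  # the default only returns a small subset of attributes
    )
    return response["FaceDetails"]
```

Each value comes with its own confidence score, so a real filter would probably ignore attributes below some threshold rather than trusting them outright.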

One thing we can’t seem to tell is his ethnicity. The “everyone is a victim and everything is oppression” crazypeople are already on it, as they, of course, have time on their hands: https://www.theverge.com/2018/5/23/17384632/amazon-rekognition-facial-recognition-racial-bias-audit-data

On to the girls.

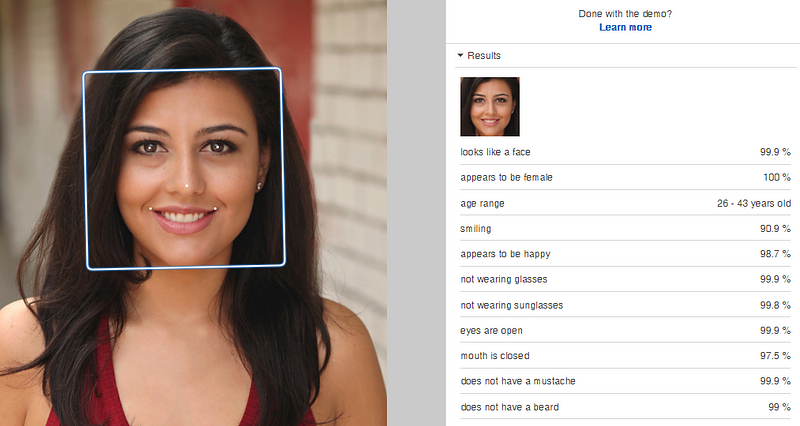

Juanita’s headshot has a female face, is happy, and smiling. Crucially, erm, she does not have facial hair. And still no ethnicity. Her acting range is 17–35, although the machine thinks 26–43, which suggests she may be lowering her playing age by close to 10 years.
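Comparing a claimed playing age against the machine’s estimate is simple interval arithmetic. A small sketch using the two ranges above; the function name is made up for illustration.

```python
def range_overlap(claimed, estimated):
    """Return the (low, high) overlap of two inclusive age ranges, or None."""
    low = max(claimed[0], estimated[0])
    high = min(claimed[1], estimated[1])
    return (low, high) if low <= high else None


claimed = (17, 35)    # playing age from the profile
estimated = (26, 43)  # age range reported by the machine

overlap = range_overlap(claimed, estimated)  # (26, 35)
lower_gap = estimated[0] - claimed[0]        # 9
upper_gap = estimated[1] - claimed[1]        # 8
```

The two ranges still overlap, so a sensible filter would flag this rather than reject it.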

It should be apparent by now how this technology can be abused. For example, with the right training, we could apply scores for attractiveness, body type, and/or intent in their expression. It could be a tool of discrimination — which is essentially what casting is.

The boffins have already discovered it is possible to detect sexual orientation from facial recognition with alarming accuracy: https://www.theguardian.com/technology/2017/sep/07/new-artificial-intelligence-can-tell-whether-youre-gay-or-straight-from-a-photograph

Machine learning is, in many instances, ironically derived from what humans perceive. The machine labels these pictures male or female based on the training material it has received, which has been defined by judgments or “votes” made by humans.

Rekognition, being able to detect celebrities, can apparently also decipher Bruce Jenner, who is biologically a man, but ahem, emotionally, a woman. So much for endemic oppression-bias. Backstage has a handy “transgender” tick box, if you need to screenshot you using it, for Twitter fans.

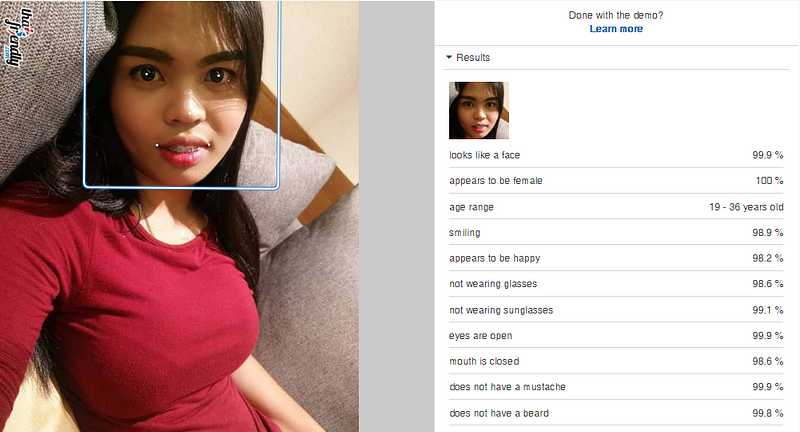

Ahaa yes, but what about a Thai ladyboy?

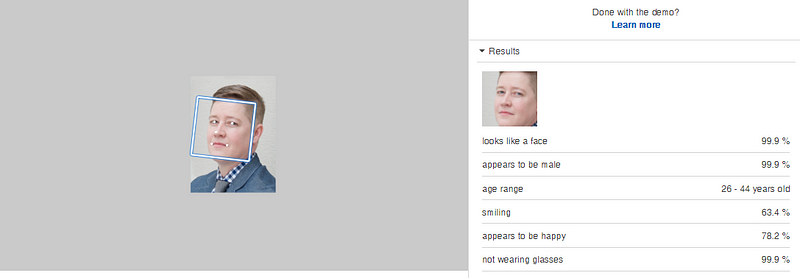

Or a butch-looking bull-dyke?

So Rekognition isn’t perfect at all, and as confused by androgyny as the rest of us. Definitely fodder for the humanities departments in university who want to waste public money on studying why people recognise “gendered” characteristics in people, such as hair length, bone structure, smiling regularity etc.

Thank You For Your Non-Contribution

That’s all great for the pictures that pass, but it’s the non-headshots we need to weed out. Again, Backstage is a handy source of the wrong kind of picture to send to casting directors.

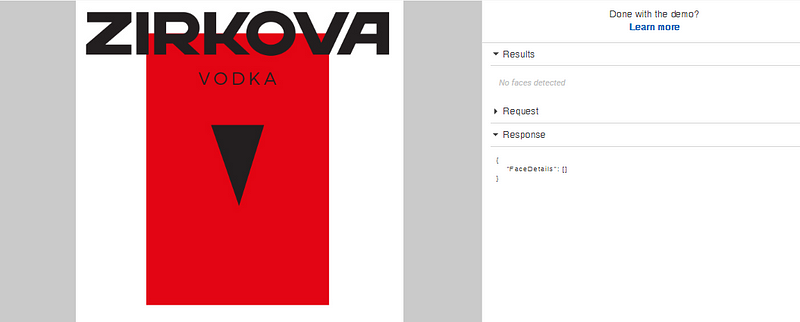

First category to knock out — does not contain a face.

Zirkova is apparently 21–91, and from Manhattan. Non-union. No facial information, so out it goes.

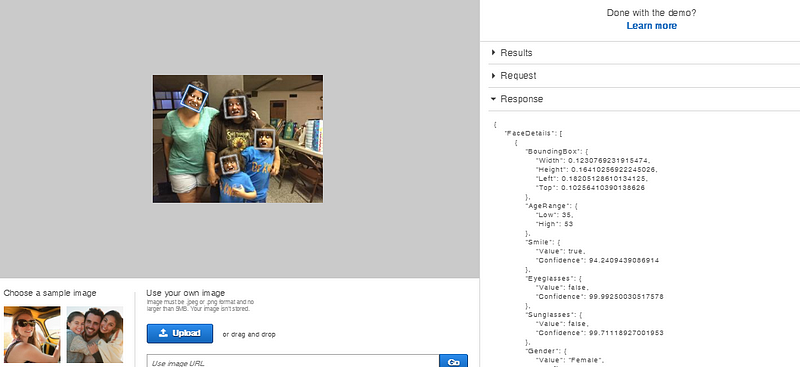

Next up, group photos. More than one face. Unless you’re casting twins.

This is where the actual data received from Rekognition needs to be interrogated slightly more. If it’s picking up multiple faces, it needs to be flagged for review.

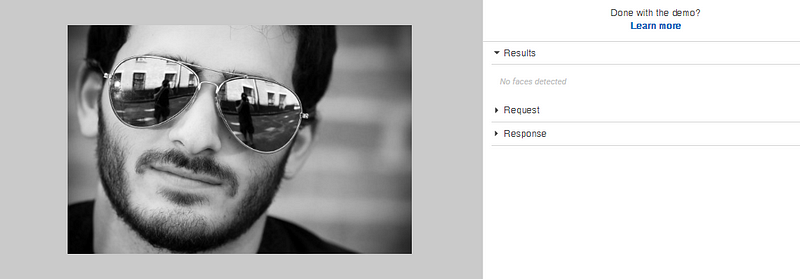

Facial coverings — sunglasses, tattoos, hijabs — don’t process well. We have to put them in the “no face” category.
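The three knock-out categories so far (no face, more than one face, covered face) can be expressed as a small triage function over the `FaceDetails` list that `detect_faces` returns. This is a sketch under assumptions: the category names and the 90% threshold are invented, and only sunglasses are checked, because that is the one covering in this list that Rekognition reports as its own attribute.

```python
MIN_CONFIDENCE = 90.0  # assumed threshold; tune against your own uploads


def triage(face_details: list) -> str:
    """Sort an image into 'no_face', 'review', or 'headshot' from its FaceDetails."""
    if not face_details:
        return "no_face"
    if len(face_details) > 1:
        return "review"  # group shot, or twins: let a human decide
    sunglasses = face_details[0].get("Sunglasses", {})
    if sunglasses.get("Value") and sunglasses.get("Confidence", 0.0) >= MIN_CONFIDENCE:
        return "no_face"  # treat a covered face the same as a missing one
    return "headshot"
```

Tattoos and head coverings have no equivalent flag in this sketch, so those would still need either label detection or a human eye.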

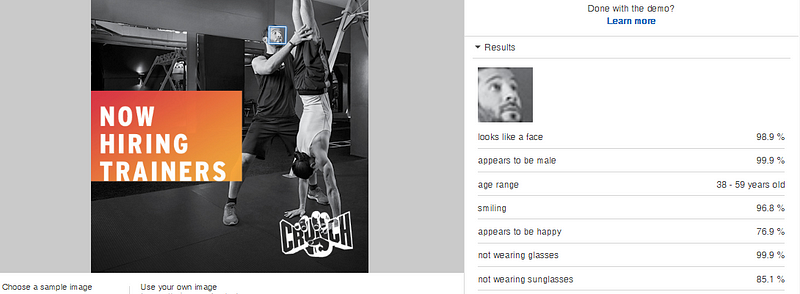

But we also have to beware of Rekognition’s sophistication, as it can generate false positives. Take, for example, this spam ad from Backstage:

For this, we can use the text recognition feature. If your image contains text, it’s out, as per the rules:
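A minimal sketch of that text check, assuming the image is in S3 and that any confidently detected line of text is enough to reject it. The helper names and the 80% threshold are assumptions. `detect_text` and the `TextDetections` field are part of the Rekognition API.

```python
def contains_text(response: dict, min_confidence: float = 80.0) -> bool:
    """True if the detect_text response holds at least one confident line of text."""
    return any(
        detection["Type"] == "LINE" and detection["Confidence"] >= min_confidence
        for detection in response.get("TextDetections", [])
    )


def fetch_text(bucket: str, key: str) -> dict:
    """Run Rekognition text detection on an image stored in S3."""
    import boto3  # imported lazily so contains_text can be used without AWS access

    client = boto3.client("rekognition")
    return client.detect_text(Image={"S3Object": {"Bucket": bucket, "Name": key}})
```

A logo on a T-shirt would trip this too, so it may be safer to flag for review than to bin outright.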

The Easy Way: Check Image Uploads in S3

If a developer has any sense, they are no longer storing uploaded files on the application server’s hard drive (local filesystem), but instead placing them in cloud object storage, such as AWS S3 or Azure Blob Storage. This is definitely the easiest integration if you have some form of app you’re already using.

Let’s say you receive an image upload, it’s saved onto S3, and the “thank you” email is sent. At that point, an event can be triggered, invoking an asynchronous check of the image record in the background.

For example, in Python:

    import boto3

    # Set AWS credentials etc.
    # Class and database_id are placeholders for your own model and record ID

    if __name__ == "__main__":
        client = boto3.client('rekognition')

        # Load DB model from ID in callback
        database_row = Class.objects.get(pk=database_id)

        response = client.detect_labels(
            Image={'S3Object': {'Bucket': 'headshots', 'Name': database_row.image}}
        )

        for label in response['Labels']:
            print(label['Name'] + ' : ' + str(label['Confidence']))
            # if label['Confidence'] < 60:  # etc etc
            #     database_row.junk_pic = 1  # Mark row as a junk picture
            #     database_row.save()

This also works nicely if you are not prepared to update your code and/or give access to it, but are happy to grant it to S3 and have an API that can receive an update. You can send a trigger to a 3rd party who accesses the bucket for you, does the checking, and posts back an update to the record via your API.
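Whoever does the checking, the trigger itself is usually an S3 event notification delivered to a function. A hedged sketch of pulling the bucket and key out of that event follows. The handler only prints, where a real one would call Rekognition or post to your API, and `objects_from_event` is a hypothetical helper name.

```python
from urllib.parse import unquote_plus


def objects_from_event(event: dict) -> list:
    """Extract (bucket, key) pairs from an S3 event notification payload."""
    found = []
    for record in event.get("Records", []):
        s3 = record.get("s3")
        if not s3:
            continue  # not an S3 record, skip it
        bucket = s3["bucket"]["name"]
        # Object keys arrive URL-encoded in the event (spaces become '+')
        key = unquote_plus(s3["object"]["key"])
        found.append((bucket, key))
    return found


def lambda_handler(event, context):
    """Entry point in the shape AWS Lambda expects for a Python function."""
    uploads = objects_from_event(event)
    for bucket, key in uploads:
        print(f"checking s3://{bucket}/{key}")  # placeholder for the real check
    return {"checked": len(uploads)}
```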

Putting It All Together, Serverlessly

The trouble is twofold: a) if you’re using email-as-a-filing-cabinet, you’re not doing photo uploads, and b) if you’re using an online system, it’s hard work to integrate image recognition into the application process, unless you have external storage.

Which is where callbacks come in handy. Once your upload is stored, it will usually have an associated URL, and we can put that into a queue to check asynchronously: if we can’t do it in-process, we can ask a machine to evaluate it in the background, to save our over-worked casting director a few strands of hair.
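The queue-and-check idea can be shown with nothing but the standard library. In this sketch an in-process `queue.Queue` and a thread stand in for whatever hosted queue and worker you would actually use, and `check` is any function that takes an image URL and returns a verdict. All names are illustrative.

```python
import queue
import threading


def start_worker(jobs: queue.Queue, check, results: dict) -> threading.Thread:
    """Start a background thread that checks each queued URL until it sees None."""

    def run():
        while True:
            url = jobs.get()
            if url is None:  # sentinel: no more work
                jobs.task_done()
                break
            try:
                results[url] = check(url)
            except Exception as exc:  # one bad image must not kill the worker
                results[url] = f"error: {exc}"
            finally:
                jobs.task_done()

    worker = threading.Thread(target=run, daemon=True)
    worker.start()
    return worker
```

The upload handler only has to call `jobs.put(url)` and carry on sending its “thank you” email. The verdict turns up in `results` whenever the worker gets to it.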

We have a few choices to run a simple API call:

- AWS Lambda (https://aws.amazon.com/lambda/)

- Google Cloud Functions (https://cloud.google.com/functions/docs/)

- Azure Functions (https://azure.microsoft.com/en-us/services/functions/)

- Apache OpenWhisk, originally from IBM (https://github.com/apache/incubator-openwhisk)

We want to provide a simple URL (or multiple URLs) where an application can supply the public URL of an uploaded image, score it according to their criteria, and apply the results. So we could have:

- example.com/api/male?url=http://cdn.com/upload-10.jpg

- example.com/api/female-20–40?url=http://cdn.com/upload-22.jpg

- example.com/api/headshot?url=http://cdn.com/upload-ABC.jpg

- example.com/api/characters/uncle-jim?url=http://cdn.com/upload-838364.jpg

- example.com/api/trash-spam?url=http://cdn.com/12345.png

- example.com/api/review-flag?url=http://cdn.com/jdhdgdgfs.png
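Whichever platform hosts it, the first job of such an endpoint is to split the request into “which criteria” and “which image”. A small sketch using only the standard library, assuming the `/api/` prefix and `url` parameter from the examples above; `parse_request` is a hypothetical name and the scoring itself is left out.

```python
from urllib.parse import parse_qs, urlsplit

API_PREFIX = "/api/"


def parse_request(request_url: str):
    """Split a full request URL into (criteria, [image URLs]) or raise ValueError."""
    parts = urlsplit(request_url)
    if not parts.path.startswith(API_PREFIX):
        raise ValueError("not an API path")
    criteria = parts.path[len(API_PREFIX):].strip("/")
    image_urls = parse_qs(parts.query).get("url", [])
    if not criteria or not image_urls:
        raise ValueError("need both a criteria name and at least one url parameter")
    return criteria, image_urls


criteria, image_urls = parse_request(
    "https://example.com/api/male?url=http://cdn.com/upload-10.jpg"
)
# criteria is "male", image_urls is ["http://cdn.com/upload-10.jpg"]
```

Repeating the `url` parameter gives a list of several images, which would let one call score a whole batch.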

If You Have A Hammer, Not Everyone Is A Nail

Technology is a tool. It’s fallible. In artistic ventures, you’re always looking for the anomaly. The ultimate goal is the odd one out who defies the casting description, but whom you have to hire on the strength of their performance or the way they make you re-imagine things.

Facial recognition is not going to allow you to filter out Indian people, or create a list of the most attractive applicants. However, it can help automate the triage process to reduce the noise. Soon the limits are going to be expanded by detection of hair length/color, eye color, height, and more — which will allow us to welcome the volume, not rear back in horror.

Think of it as you do your “junk mail” folder. You need to check it regularly in case you miss something or someone important.

If only we could teach machines to detect whether someone can act.